Introduction

I got to know OpenCV while investigating computer vision solutions for a small startup called robodev GmbH (see here). There I developed approaches for real-time object recognition and for computing the angle and position of the recognized object. I fell in love with C++ and its potential for high-performance applications. After a while, I started digging deeper into Android development in Java. Due to my experience as a mobile (games) producer at Goodgame Studios, I’m driven by the possibilities and potential of mobile solutions, so plenty of awesome app ideas are born in my brain. Now it’s time to bring them to life.

I wrote my first blog post about how I got into programming (see here) and how you can do that too, even if you don’t come from the IT field. In this post, we will play around with OpenCV on Android. Since I didn’t have a small use case in mind for OpenCV’s vast feature list, I’ll keep that part simple.

For my first app idea, reading business cards could be a neat side feature, and since I had heard that OpenCV and Tesseract are a powerful team (process pictures with OpenCV, read text with Tesseract OCR), I saw an excellent opportunity to put both into a lightweight app.

In this blog post, I will show you how to write that app all by yourself, step by step. You will learn to use C++ code, OpenCV, and Tesseract OCR on Android.

What is OpenCV?

OpenCV is an open-source computer vision library. It has a powerful feature set, ranging from simple image processing through object, face, and feature recognition, 3D calibration, and visualization up to machine learning and more. A big community of scientists, professionals, and hobbyists maintains comprehensive documentation, which is helpful for everyone, including beginners (see here). I was more than happy to realize that it supports Android development in addition to C++, C, Python, Scala, and Java. Unfortunately, the built-in OCR is not known to be very strong, so I decided to combine the strengths of OpenCV’s image processing with another library called Tesseract (as a Marvel fan, I really like that name).

What is Tesseract OCR?

Tesseract is a free, command-line OCR program for Linux and Windows. It supports more than 100 languages and was considered the most accurate open-source OCR engine available in 2006. In 2011, a brave man named Robert Theis released “tess-two”, a fork of the Tesseract Android Tools, perhaps not knowing that just two years later nobody would work on Tesseract Android Tools anymore (the last commit was made in 2013). Thanks to Robert Theis, we can still enjoy this open-source library today: updated, with new features, and easy to use. So let’s get to work and get your Android Studio setup done:

OpenCV Part

Setup Android NDK

To be able to use OpenCV in its pure form, we first need to set up the Android NDK properly, and yes… we’re gonna write C++ code in our app 😉

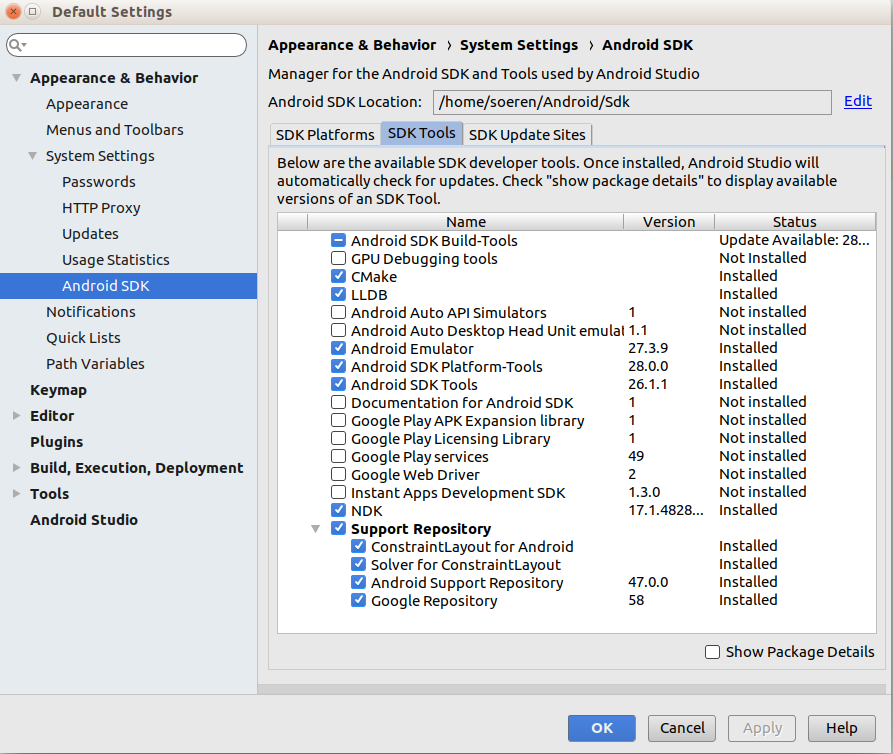

After creating a new Empty Activity project, use the Android Studio SDK Manager (Tools -> SDK Manager -> SDK Tools) to install the necessary tools: NDK, CMake, and LLDB. Note the location of your SDK, though; you’ll need it in the next step. After you apply your changes, a long download of around 700MB will start. Don’t worry, that is intended.

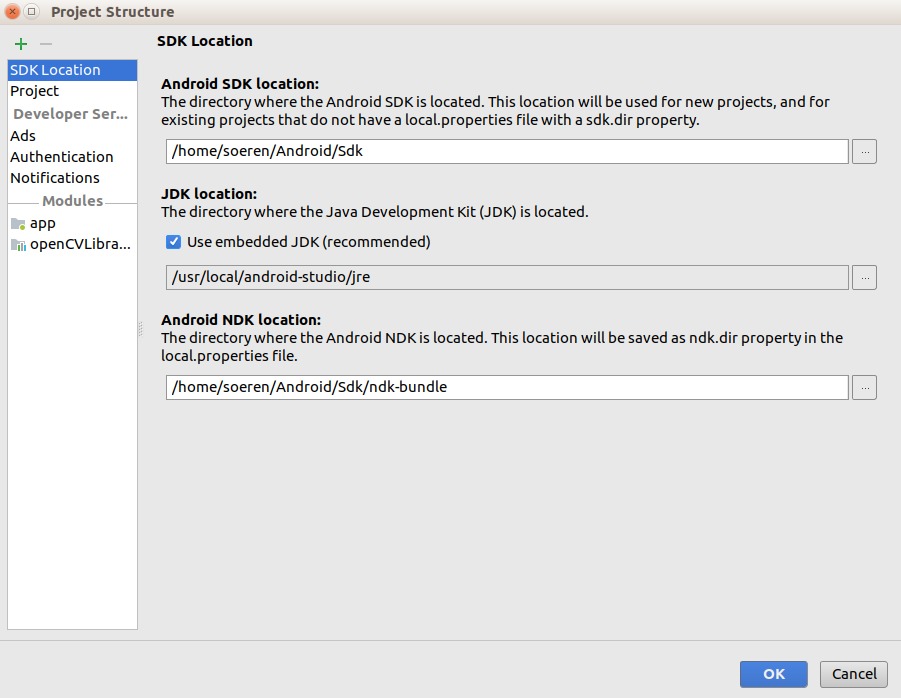

By the time you wake up from sweet dreams, the download may be finished and you can keep working. Now you need to add the location of your Android NDK. Enter it under File -> Project Structure -> SDK Location -> Android NDK location. Yes, you just saw the needed path: it is the SDK location + /ndk-bundle.

There you go. Your first step to glory is done. The next part is a bit trickier. OpenCV, here we come!

Setup OpenCV

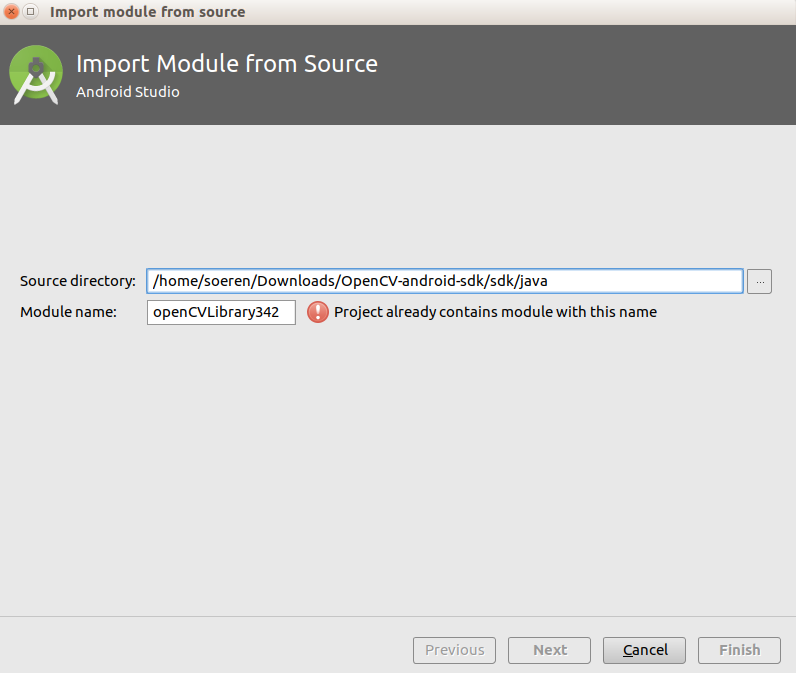

To set up OpenCV, first download the latest Android release of the library from the OpenCV Releases page (at the time of writing, 3.4.2). Unpack the zip, preferably into the Android SDK folder (so you have everything in one place), and import it into your project as a module: File -> New -> Import Module. In the dialog that pops up, navigate to the java folder of the module you just unpacked; mine, for example, is /home/soeren/Downloads/OpenCV-android-sdk/sdk/java (on Linux). If the path is correct, the name of the library will show up and it looks like the following (ignore “Project already….” in that picture):

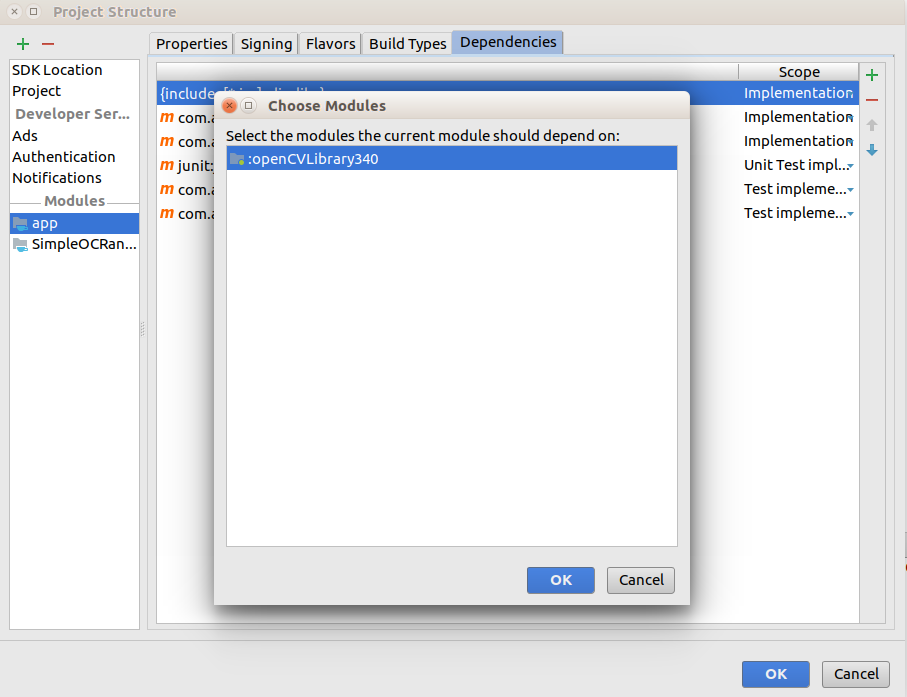

Keep hitting “Next” and “OK” with the default checkmarks until the wizard finishes. Now add the module as a dependency to your app: under File -> Project Structure -> app -> Dependencies -> + -> Module dependency, OpenCV appears in a pop-up.

The next step is to correct the build.gradle file of the OpenCV library (you will find it right below your app’s build.gradle file). Align the fields compileSdkVersion, minSdkVersion, and targetSdkVersion so that they match the build.gradle (app) file. Mine looks like this:

build.gradle (openCVLib…) for initial setup

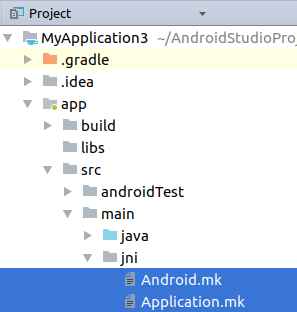

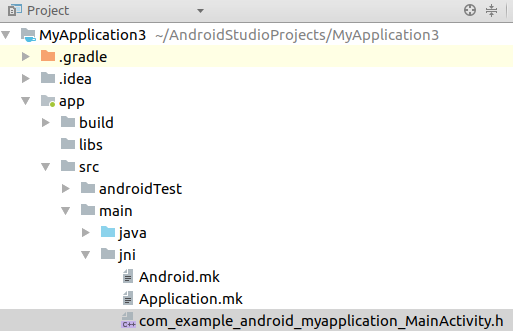

Now create the directory src/main/jni in your project. Inside it, create one file called Android.mk and another called Application.mk; they tell the NDK build tool how to build the native code.

Write the following code into the Android.mk-file:

Android.mk for NDK and OpenCV setup

Write your Android NDK path into NDK_MODULE_PATH and set OPENCV_ROOT to your own OpenCV location. Later we will fill in LOCAL_SRC_FILES, but for now we can leave it empty. Then modify the Application.mk file:

Application.mk for NDK setup

Now tell your build.gradle (app) file to compile the C++ code into .so files in the jniLibs folder. Do that by writing this…

build.gradle (app) File for OpenCV setup

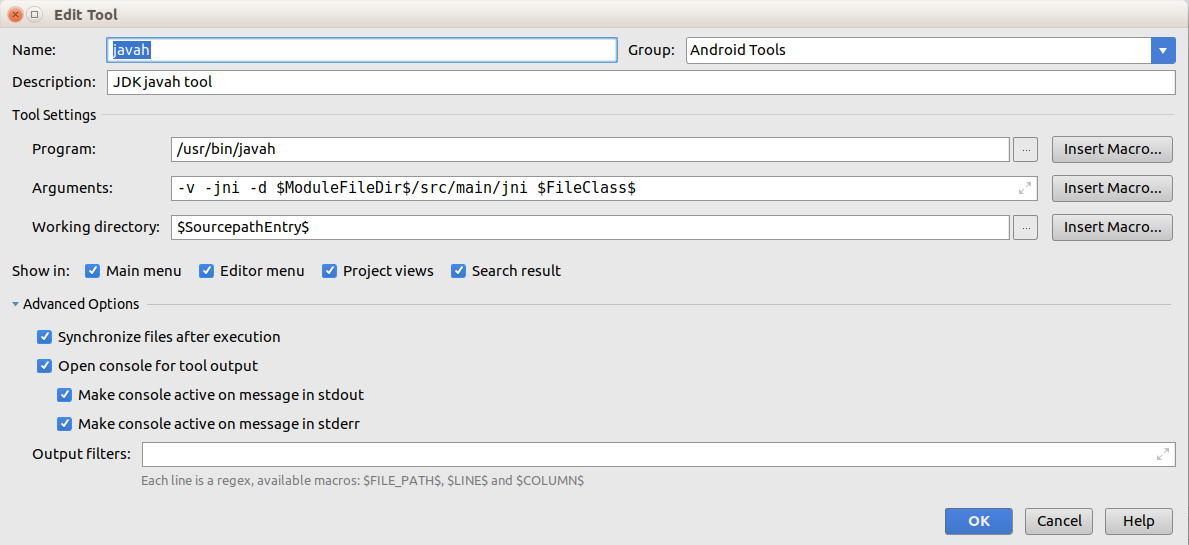

…into your build.gradle (app) file, replacing the default buildTypes section. Remember to change the ndkDir value to your own. Go ahead and run the Gradle sync, but if you try to build your app now, you will get a wonderful error, something like Process 'command 'PATHTONDK/ndk-build'' finished with non-zero exit value 2. This cryptic error message cost me quite some patience, but don’t worry: it is still expected, because we haven’t specified any LOCAL_SRC_FILES yet. That’s what we’re going to do now.

For the sake of convenience, we’re going to use javah as an external tool. To do so, navigate to File -> Settings -> Tools -> External Tools -> + and add the javah tool like this:

Fill in the parameters as follows:

* “Program”: the path to your javah installation (on Linux, you can find it by typing which javah into a terminal; note that javah ships only with JDK 9 and earlier, since JDK 10 removed it in favor of javac -h)

* “Arguments”: -v -jni -d $ModuleFileDir$/src/main/jni $FileClass$

* “Working directory”: $SourcepathEntry$

After successfully setting javah up, write a little test function into your MainActivity:

```java
public static native int test();
```

Run the javah tool via right-click on test() -> Android Tools -> javah. It generates a corresponding header file, which you will find in your jni folder. Copy the name of that file into LOCAL_SRC_FILES in your Android.mk file. Mine is called com_example_android_MYAPPLICATION_MainActivity.h; yours will be something close to that, I guess.
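The header name and the C++ function names that javah generates follow the JNI naming convention: each native method becomes a C function named Java_, then the fully qualified class name with dots replaced by underscores, then an underscore and the method name. Literal underscores in package, class, or method names are escaped as _1, which is a common source of “UnsatisfiedLinkError” surprises. The following is a minimal sketch of that rule for non-overloaded methods; the class JniNames is hypothetical and not part of javah, OpenCV, or Android.

```java
/**
 * Hypothetical helper (not part of javah, OpenCV or Android): builds the JNI
 * "short name" that the C/C++ implementation of a Java native method must use.
 * Overloaded native methods additionally get "__" plus the mangled signature,
 * which this sketch does not handle.
 */
final class JniNames {
    private JniNames() {}

    // Escapes one name according to the JNI name-mangling rules.
    static String mangle(String name) {
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < name.length(); i++) {
            char c = name.charAt(i);
            if (c == '.' || c == '/') {
                out.append('_');                 // package separators become '_'
            } else if (c == '_') {
                out.append("_1");                // a literal underscore is escaped
            } else if (c == ';') {
                out.append("_2");
            } else if (c == '[') {
                out.append("_3");
            } else if (c < 128 && Character.isLetterOrDigit(c)) {
                out.append(c);                   // plain ASCII letters and digits stay as-is
            } else {
                out.append(String.format("_0%04x", (int) c)); // other Unicode chars, lowercase hex
            }
        }
        return out.toString();
    }

    // "Java_" + mangled fully qualified class name + "_" + mangled method name.
    static String symbolFor(String qualifiedClassName, String methodName) {
        return "Java_" + mangle(qualifiedClassName) + "_" + mangle(methodName);
    }
}
```

For example, symbolFor("com.example.my_app.OpenCvMaker", "makeGray") yields Java_com_example_my_1app_OpenCvMaker_makeGray, which is the name the C++ side has to define (inside extern "C") for the method to be found at runtime.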

Now run the app and pray that you didn’t make any path or copy-paste mistakes. If something doesn’t work and you get a cryptic error message, check all the paths again, and if that doesn’t help, start over. Been there… done that… it can happen to everyone! Just don’t give up. 🙂

If it builds successfully and a “Hello World!” is smiling up at you from your device screen: YEAH!!! You’ve done great so far. The actual fun starts now!

Setup the Camera

To use the camera, we need to write some code. First, we implement our MainActivity and its XML layout. This is just a small button on an empty screen, nothing more. But there is more to come! I prepared a gist for you, so you can simply copy and paste the code into the corresponding files: MainActivity for initial setup (don’t forget to change the package name)

Our next step is to create a new empty Activity for working with the camera; let’s call it CameraActivity. This is where we will use the camera and, later, have fun with OpenCV and tess-two, but for now it only opens the camera. Copy the code from the following gist into the corresponding files: CameraActivity initial setup.

As you can see, we need to handle state changes carefully, because keeping the camera running constantly is quite demanding. New to you will be the onCameraViewStarted, onCameraViewStopped, and onCameraFrame methods. In them, you interact directly with the camera input.

In our MainActivity, we start the CameraActivity by replacing the // do stuff here comment with the following code:

```java
// Requires: import android.content.Intent;
Intent intent = new Intent(MainActivity.this, CameraActivity.class);
startActivity(intent);
```

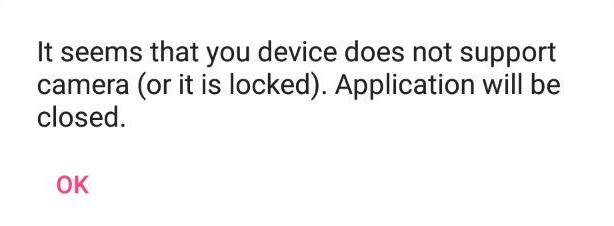

Well done! You should be able to run the app now. And if you press the button… wait!

What is this? Ahhh!! We forgot the permissions. Could it be the permissions?

Get the permissions for OpenCV

To get the permissions, we check for them in the MainActivity when the user presses the button and request them if necessary. Add all the uncommented code to the MainActivity at the positions indicated by the comments: Permissions in MainActivity
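The check-then-request logic boils down to one decision: which of the required permissions are not granted yet? The following plain-Java sketch isolates that decision so it can be reused when more permissions are added later. PermissionPlan and missing are hypothetical names; on Android, the isGranted predicate would typically wrap ContextCompat.checkSelfPermission(this, p) == PackageManager.PERMISSION_GRANTED, and a non-empty result would be passed to ActivityCompat.requestPermissions together with a request code of your choice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/**
 * Hypothetical helper: given the permissions the app needs and a way to check
 * each one, return only those that still have to be requested at runtime.
 */
final class PermissionPlan {
    private PermissionPlan() {}

    static String[] missing(String[] required, Predicate<String> isGranted) {
        List<String> toRequest = new ArrayList<>();
        for (String permission : required) {
            if (!isGranted.test(permission)) {
                toRequest.add(permission);   // not granted yet, so ask the user for it
            }
        }
        return toRequest.toArray(new String[0]);
    }
}
```

If the returned array is empty, the button handler can start the CameraActivity right away; otherwise it requests the missing permissions and continues in onRequestPermissionsResult.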

The last step is to declare the permission in the AndroidManifest.xml: Manifest for camera permissions

Okay, let’s run the app and see whether that was the problem… Asking for permissions seems to work, but…

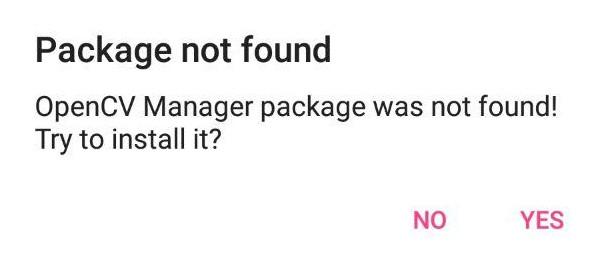

Oh come on! Nooooo!! Press “No”! We don’t need that ominous “OpenCV Manager”! We already have the whole library inside our app.

Ok ok…calm down. We can easily correct the rude OpenCV behavior:

Ignore OpenCVManager

One of OpenCV’s most useless features is that it checks whether the device it’s running on has the OpenCV library installed, and if not, it won’t work until the OpenCV Manager app is installed. Since we put the whole library into our app, we don’t need that, yet because the library isn’t completely loaded when the check runs, this message appears. With the following gist, we override the onPackageInstall method of the BaseLoaderCallback class to prevent the app from triggering the installation. Add the following uncommented code to your CameraActivity, right inside the BaseLoaderCallback class:

BaseLoaderCallback-class override the onPackageInstall-method

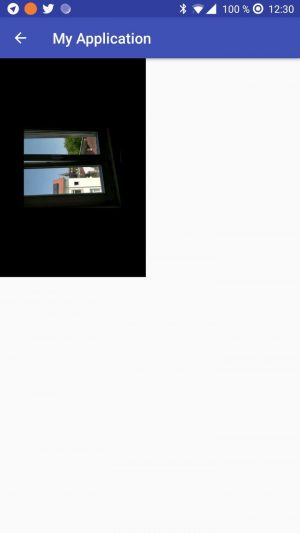

Now run the app again. If you didn’t mix up some code it should finally look like this:

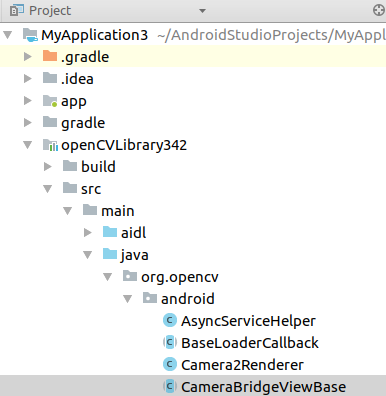

Good job! But no time to celebrate: the camera orientation and size somehow make you feel a bit dizzy, right? We’ll get our hands dirty and change something right inside the OpenCV library to fix this. Find the class CameraBridgeViewBase (package org.opencv.android) in the imported OpenCV module.

Look for the method deliverAndDrawFrame and replace it with the following code: Adjusting rotation and scale of the camera

Yeah! Doesn’t it look wonderfully straight now? It somehow feels like the first time we see anything through our camera. If your camera view seems a bit too small or too big, simply change the mScale value accordingly. If you made it this far, congratulations! Figuring out the whole procedure up to this point cost me around two days of my life! I’m glad to make sacrifices for you, though 😉 The next part is going to be much easier!
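The replacement deliverAndDrawFrame lives in the gist and is not reproduced here. To illustrate what “rotate and scale” means geometrically, the following plain-Java sketch assumes the frame is rotated by 90 degrees and drawn centered on the canvas, with a scale factor that multiplies the rotated frame size. That mirrors how the stock CameraBridgeViewBase applies mScale to the cached bitmap when drawing a centered destination rectangle. PreviewGeometry, fitScale, and destinationRect are hypothetical names, not OpenCV API.

```java
/**
 * Hypothetical geometry helper: where a camera frame lands on the canvas after
 * a 90-degree rotation, scaled by a factor playing the role of mScale.
 */
final class PreviewGeometry {
    private PreviewGeometry() {}

    // Largest scale at which the rotated frame still fits entirely on the canvas.
    static float fitScale(int frameW, int frameH, int canvasW, int canvasH) {
        // A 90-degree rotation swaps width and height: the rotated frame is frameH x frameW.
        return Math.min((float) canvasW / frameH, (float) canvasH / frameW);
    }

    // Returns {left, top, right, bottom} of the centered destination rectangle.
    static int[] destinationRect(int frameW, int frameH, int canvasW, int canvasH, float scale) {
        int drawW = Math.round(frameH * scale);  // rotated width, scaled
        int drawH = Math.round(frameW * scale);  // rotated height, scaled
        int left = (canvasW - drawW) / 2;        // center horizontally
        int top = (canvasH - drawH) / 2;         // center vertically
        return new int[] {left, top, left + drawW, top + drawH};
    }
}
```

For a 640x480 frame on a 1080x1920 portrait canvas, fitScale returns 2.25, and destinationRect with that scale gives {0, 240, 1080, 1680}: full width, centered vertically. A larger factor crops the edges; a smaller one leaves a border.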

Use the OpenCV library for image processing

Let’s start putting some functionality into our app and add some more buttons to our activity_camera.xml and corresponding listeners to our CameraActivity:

Adding function buttons, listeners and call OpenCvMaker methods

Don’t mind the errors yet; we’ll fix them now. Create a Java class named OpenCvMaker.java and add the following code (and again, remember to change the package name):

Create an OpenCV manager class

Now delete the header file you generated earlier when you set up the native test() method with the javah tool.

Navigate to your OpenCvMaker class and run the javah tool on one of the native methods (e.g. makeGray(long......)) via right-click -> Android Tools -> javah. A new header file will be generated. Open this file and make the following changes:

Add methods to the header

Then, inside the jni folder, create a .cpp file (without a header) with the same name as your header, and add the following code to it:

Implementation of OpenCV methods in C++

And last but not least, copy the name of this .cpp file (including the “.cpp”!!) and set it as LOCAL_SRC_FILES in your Android.mk file.

Run your app and…tadaaa! Now you can play around with four of the most common functions of OpenCV. Well done!
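What the C++ side does for makeGray is in the gist. Assuming it wraps OpenCV’s color conversion to grayscale, the per-pixel math is the luminance formula OpenCV documents for its RGB/BGR-to-gray conversions: Y = 0.299 R + 0.587 G + 0.114 B. The following plain-Java sketch applies that formula to Android-style packed 0xAARRGGBB pixels, purely to make the operation concrete. GraySketch is a hypothetical name, and OpenCV itself uses an optimized fixed-point implementation, so individual values may differ by one.

```java
/**
 * Plain-Java illustration of the RGB-to-gray luminance formula
 * Y = 0.299 R + 0.587 G + 0.114 B (not OpenCV code).
 */
final class GraySketch {
    private GraySketch() {}

    // Converts one packed 0xAARRGGBB pixel to a gray value in 0..255.
    static int luminance(int argb) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        return (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
    }

    // Converts a whole image stored as packed ARGB pixels, one gray value per pixel.
    static int[] toGray(int[] argbPixels) {
        int[] gray = new int[argbPixels.length];
        for (int i = 0; i < argbPixels.length; i++) {
            gray[i] = luminance(argbPixels[i]);
        }
        return gray;
    }
}
```

The weights reflect how sensitive the eye is to each channel, which is why pure green (about 150) looks much brighter in gray than pure blue (about 29).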

You just learned how to use C++ code AND OpenCV functionality inside your Android app. If this is all you wanted to learn, take a break and lean back. Give your loved ones a hug and tell them you did it! Also, take a break if this took you a long time and your computer is smoking from all the builds. In times like these, during the hot summer, I like to put my laptop into the fridge to give it a little chill 🙂

Tess-Two Part

Now that we have had a wonderful bath in a jacuzzi full of self-esteem, we can go back to work. One little part is still missing: the OCR! Don’t worry, I will make it quick.

First, add the following dependency to your build.gradle (app)-file and trigger the gradle-sync:

```groovy
implementation 'com.rmtheis:tess-two:9.0.0'
```

Tess-two is now available and ready to use. Add the HUD components to activity_camera.xml and some functionality to CameraActivity.java with the following modifications:

Read-button and textview for CameraActivity

Create a new Java class with the name MyTessOCR.java and put the following code into it (don’t forget to change the package!):

Implementing the tess-two class

Now let’s use it in the CameraActivity. Since OCR can be computationally demanding, we will run it in a separate thread. You will also be asked to import android.graphics.Matrix; go ahead and confirm. It is only used to rotate the camera input to match the preview, which we already rotated inside the OpenCV library. Do the following: Call MyTessOCR class from CameraActivity
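The two ideas in that step, rotating the frame to match the preview and keeping the slow OCR call off the UI thread, can be sketched in plain Java. This is a minimal sketch, assuming the frame is available as packed pixels in row-major order; the recognizer function stands in for MyTessOCR, and OcrOffload and its methods are hypothetical names. On Android, the rotation is what Matrix.postRotate(90) plus Bitmap.createBitmap does for you, and the finished text would be handed back with runOnUiThread before touching any TextView.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

/**
 * Hypothetical sketch: rotate a frame by 90 degrees clockwise and run a slow
 * recognizer on a single worker thread so the caller is never blocked.
 */
final class OcrOffload {
    private final ExecutorService worker = Executors.newSingleThreadExecutor();

    // Rotates a width x height image 90 degrees clockwise; the result is height x width.
    static int[] rotate90Clockwise(int[] pixels, int width, int height) {
        int[] out = new int[pixels.length];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Source pixel (x, y) moves to column (height - 1 - y), row x of the new image.
                out[x * height + (height - 1 - y)] = pixels[y * width + x];
            }
        }
        return out;
    }

    // Rotates and recognizes in the background; the future completes with the text.
    CompletableFuture<String> recognizeAsync(int[] pixels, int width, int height,
                                             Function<int[], String> recognizer) {
        return CompletableFuture.supplyAsync(
                () -> recognizer.apply(rotate90Clockwise(pixels, width, height)), worker);
    }

    // Call when the activity is destroyed so the worker thread can exit.
    void shutdown() {
        worker.shutdown();
    }
}
```

A single worker thread also means that repeated presses of the read button are processed one after another instead of running several OCR passes in parallel.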

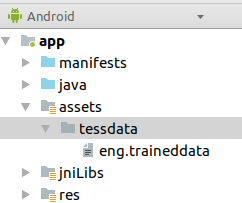

Tesseract, and therefore tess-two, needs traineddata to know how and what to recognize. Since tess-two is a fork of an older Tesseract version, we cannot just use any traineddata available in the Tesseract git repository. Download the following traineddata and you will be fine: Download eng.traineddata. Create an assets folder via right-click on java -> New -> Folder -> Assets Folder, then create a directory called tessdata inside it, and finally copy the downloaded eng.traineddata file into that directory. When you’re done, it should look like this:

Next, you need to make sure that this file is also copied onto the device. For that, we add a small method to our CameraActivity and call it in the onCreate method: Add prepareTessData-method to CameraActivity
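The core of such a preparation step is “copy the bundled file to storage once, skip it on later starts”. The following plain-Java sketch shows that logic with java.nio; TessDataSetup and copyIfMissing are hypothetical names, and the author’s actual method is in the gist. On Android, the stream would come from getAssets().open("tessdata/eng.traineddata"), and dataDir would be the directory later passed to tess-two’s TessBaseAPI.init(dataPath, "eng"), which expects the parent of the tessdata folder rather than the folder itself.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

/**
 * Hypothetical sketch of a "prepare tessdata" step: copy <language>.traineddata
 * into <dataDir>/tessdata/ if it is not there yet.
 */
final class TessDataSetup {
    private TessDataSetup() {}

    // Returns the path of the traineddata file; copies the stream only if the file is missing.
    static Path copyIfMissing(InputStream source, Path dataDir, String language) {
        Path tessdata = dataDir.resolve("tessdata");
        Path target = tessdata.resolve(language + ".traineddata");
        try (InputStream in = source) {          // always close the asset stream
            Files.createDirectories(tessdata);   // no-op if the folder already exists
            if (Files.notExists(target)) {
                Files.copy(in, target);          // streams the whole file to disk
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return target;
    }
}
```

Checking for the file first keeps app start fast after the first launch, since the traineddata file is several megabytes and would otherwise be copied on every onCreate.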

Alright! The last thing to do is to get read and write permissions for the external storage, since our previously added method writes there. Add the following checks and constants to the MainActivity and the additional permissions to the AndroidManifest.xml:

Ask for external storage read and write permissions

Okay! Breathe in. Breathe out. You did it! Build (it can take a while), run, and play 🙂 Hold your phone in front of the text below and press READ IT! in your app.

Find my original Git repo of the app here