In this blog post I describe my experiences with AI services from Microsoft and how we (team Billige Plätze) were able to build award-winning hackathon prototypes with them in roughly 24 hours. The post is structured around the hackathons in which we used AI services. As of now (11.06.2018) there are two of them, but I’m sure there are a lot more to come. The first was the BlackForest Hackathon, which took place in autumn 2017 in Offenburg, and the second was the Zeiss hackathon in Munich in January 2018.

This post is not intended to be a guide on integrating said services (Microsoft has good documentation for all their products 🙂 ), but rather a field report on how these services can be used to realize cool use cases.

Artificial Intelligence as a Service (AIaaS) is a third-party offering of artificial intelligence, accessible via an API. So people get to take advantage of AI without spending too much money or, if you’re lucky and a student, no money at all.

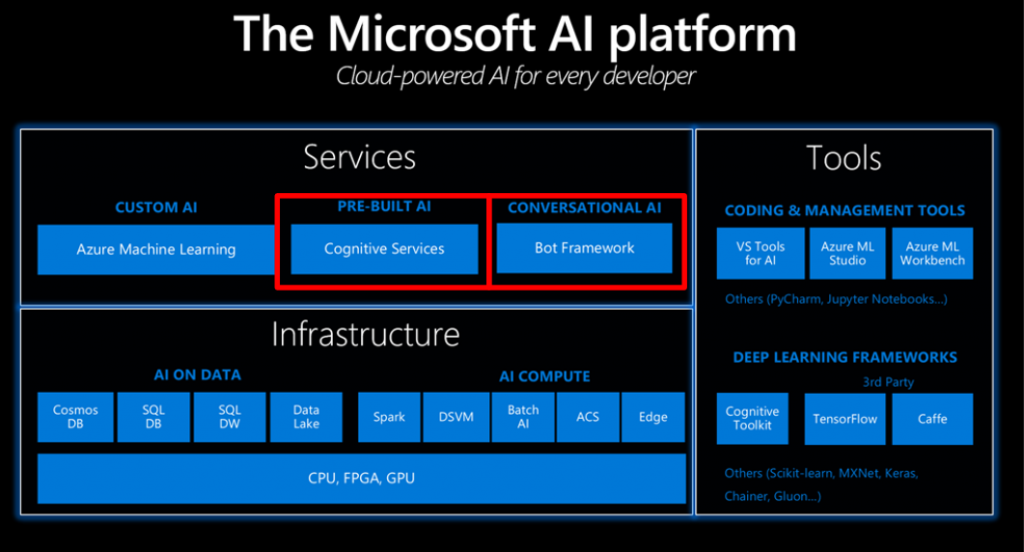

The Microsoft AI Platform

As mentioned above, we used several Microsoft services to build our prototypes, including several Microsoft Cognitive Services and the Azure Bot Service. All of them are part of the Microsoft AI platform, where you can find services, infrastructure and tools for machine learning and artificial intelligence that you can use for your personal or business projects.

we used only some of the services of the AI platform

BlackForest Hackathon

The BlackForest Hackathon was the first hackathon ever for our team, and we were quite excited to participate.

The theme proposed by the organizers of the hackathon was “your personal digital assistant”, and after some time brainstorming we came up with the idea of creating an intelligent bot that assists you with creating your learning schedule. Most of us are serious procrastinators (including me :P), so we thought that such a bot could help us stick to our learning schedule and motivate us along the way to the exam.

The features we wanted to implement for our prototype were

- asking the user about his habits (usual breakfast, lunch and dinner time as well as sleep schedule),

- asking the user about his due exams (lecture, date and the amount of time the user wants to learn for the exam) and

- automatic creation of learning appointments, based on the user input, within the user’s Google Calendar.
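The post does not show how the appointments were computed, so the following is only a minimal sketch of one possible approach: spread the requested study hours evenly over the days before the exam, with one session per day at a fixed start time that the user has free. `StudyPlanner`, `Plan` and all parameter names are hypothetical, and meal times, sleep and the actual Google Calendar call are left out.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; not the code we wrote at the hackathon.
public static class StudyPlanner
{
    // Spreads totalHours evenly over the days from "today" up to the day before the exam.
    // dailyStart is assumed to be a time of day that does not collide with meals or sleep.
    public static List<(DateTime Start, TimeSpan Duration)> Plan(
        DateTime today, DateTime examDate, double totalHours, TimeSpan dailyStart)
    {
        var sessions = new List<(DateTime Start, TimeSpan Duration)>();

        // Number of study days: today counts, the exam day itself does not.
        int days = (examDate.Date - today.Date).Days;
        if (days <= 0 || totalHours <= 0)
        {
            return sessions; // nothing to plan
        }

        TimeSpan perDay = TimeSpan.FromHours(totalHours / days);
        for (int i = 0; i < days; i++)
        {
            sessions.Add((today.Date.AddDays(i) + dailyStart, perDay));
        }
        return sessions;
    }
}
```

For an exam on 23.08.2018, planning on 20.08.2018 with 6 hours and a 16:00 slot gives three two-hour sessions on the 20th, 21st and 22nd. Each entry would then become one calendar appointment.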

With the topic of the hackathon in mind, we wanted the bot to gather the user input through a dialog that felt as natural as possible.

Billige Plätze in Action

The technology stack

Cem and I had experimented a little bit with the Azure Bot Service before the hackathon, and we thought it would be a perfect match for the task of writing the bot. We also wanted the bot to process natural language and stumbled upon LUIS, a machine-learning-based service for natural language processing, which can be integrated seamlessly into the bot framework (because it is from Microsoft, too).

Our stack consisted of, as expected, mainly Microsoft technologies. We used

- C#,

- .NET Core,

- Visual Studio,

- Azure Bot Service,

- LUIS and

- Azure.

The combination of C#, the bot service and LUIS provided the core functionality of our bot, and we were able to deploy it to Azure with one click.

The Azure Bot Service

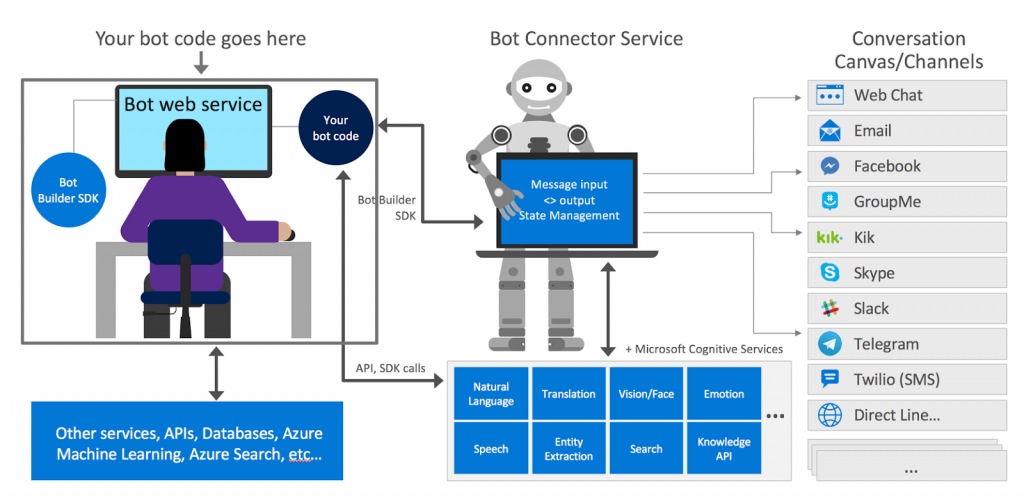

The Bot Service provides an integrated environment that is purpose-built for bot development, enabling you to build, connect, test, deploy, and manage intelligent bots, all from one place. Bot Service leverages the Bot Builder SDK with support for .NET and Node.js.

Overview of the Bot Service

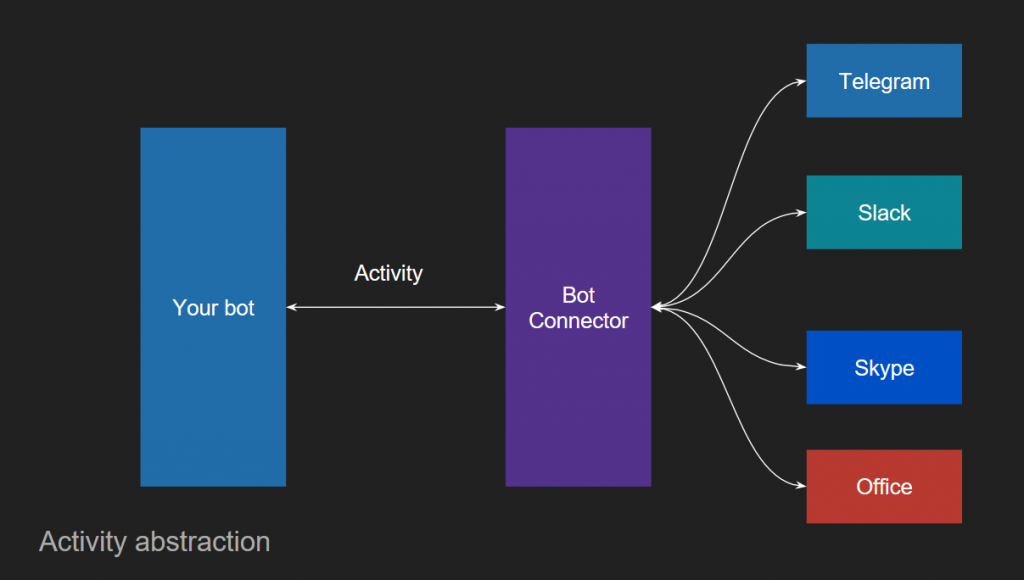

The bot service consists of the concepts of

- channels, which connect the bot service with a messaging platform of your choice,

- the bot connector, which connects your actual bot code with one or more channels and handles the message exchange between the channels and the bot via

- activity objects.

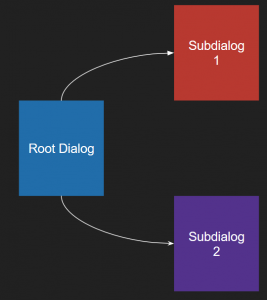

Dialogs, another core concept, help organize the logic in your bot and manage conversation flow. Dialogs are arranged in a stack, and the top dialog in the stack processes all incoming messages until it is closed or a different dialog is invoked.
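To make the stack behaviour concrete, here is a toy model in plain C#. It is not the Bot Builder SDK API: `IToyDialog`, `EchoUntilDone` and `ToyDialogStack` are made-up names that only mimic the rule that the top dialog receives every message until it closes.

```csharp
using System.Collections.Generic;

// Toy model of a dialog stack; NOT the Bot Builder SDK API.
public interface IToyDialog
{
    // Returns the reply and reports whether this dialog is finished.
    string Handle(string message, out bool done);
}

// A dialog that echoes every message and closes when it receives "done".
public sealed class EchoUntilDone : IToyDialog
{
    private readonly string _name;

    public EchoUntilDone(string name) => _name = name;

    public string Handle(string message, out bool done)
    {
        done = message == "done";
        return done ? $"{_name}: closing" : $"{_name}: {message}";
    }
}

public sealed class ToyDialogStack
{
    private readonly Stack<IToyDialog> _stack = new Stack<IToyDialog>();

    // Invoking a new dialog puts it on top, so it takes over the conversation.
    public void Push(IToyDialog dialog) => _stack.Push(dialog);

    // Only the top dialog sees the message; a finished dialog is popped,
    // which hands control back to the dialog below it.
    public string Dispatch(string message)
    {
        if (_stack.Count == 0)
        {
            return "(no active dialog)";
        }

        string reply = _stack.Peek().Handle(message, out bool done);
        if (done)
        {
            _stack.Pop();
        }
        return reply;
    }
}
```

If a "habits" dialog is pushed first and an "exams" dialog second, all messages go to "exams" until it receives "done"; after that the next message is answered by "habits" again.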

General Conversation flow of the Bot Service

By using the bot service we were able to focus on programming the actual conversation with the Bot Builder SDK. To use the bot service, you just have to create a new bot in Azure and connect it to the channels of your choice, which is also done via the web app (Telegram worked like a charm).

After creating your bot in Azure, you can start coding right away by using a template provided by Visual Studio. You just have to type your bot credentials into the configuration file and you’re good to go. Because we didn’t have to worry about where to host the bot and how to set it up, we were able to quickly create a series of dialogs (which involved serious copy-pasting :P) and test our conversation flow right away with the Bot Framework Emulator. When we were happy with the results, we published the bot on Azure with one click in Visual Studio.

We didn’t have to worry about getting the user input from the messaging platform, and integrating natural language understanding into our bot was very easy because we used Microsoft LUIS. We were seriously impressed by the simplicity of the bot service.

Microsoft LUIS

LUIS is a machine learning-based service to build natural language into apps, bots, and IoT devices.

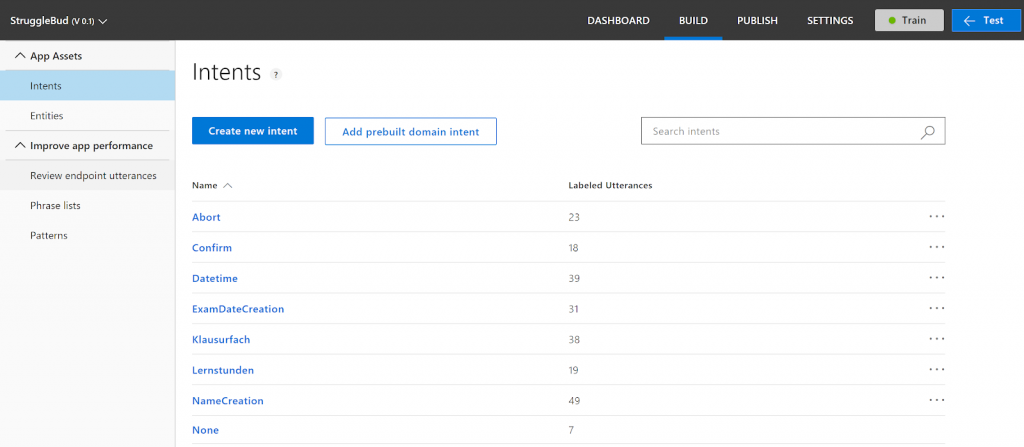

You can create your own LUIS app on the LUIS website. A LUIS app is basically a language model designed by you, specific to your domain. Your model is composed of utterances, intents and entities: utterances are example phrases users could type into your bot, intents are the user’s intentions you want to extract from an utterance, and entities represent relevant pieces of information in the utterance, much like variables in a programming language.

An example of an utterance from our bot could be “I write my exam on the 23rd of August 2018”. Based on the utterance, our model is able to extract the intent “ExamDateCreation” as well as the entity “Date” with the value 23.08.2018 (for a more detailed explanation, visit the LUIS Documentation). Once you have defined all the intents and entities needed for your domain in the LUIS web application and provided enough sample utterances, you can test and publish your LUIS app. After publishing, you can access your app via a REST API or, in our case, through the Azure Bot Service.
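As a rough illustration of the REST route, the sketch below reads the intent and the entities out of a LUIS response. It assumes a response with a `topScoringIntent` object and an `entities` array, as returned by the v2 prediction endpoint, and it uses `System.Text.Json`, which ships with .NET Core 3.0 and later. `LuisResponseReader` is a hypothetical helper, so please check the field names against the LUIS documentation for your API version.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper; assumes the v2-style response layout described above.
public static class LuisResponseReader
{
    // Returns the top intent name and a map from entity type to the matched text.
    public static (string Intent, Dictionary<string, string> Entities) Read(string json)
    {
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            JsonElement root = doc.RootElement;

            string intent = root
                .GetProperty("topScoringIntent")
                .GetProperty("intent")
                .GetString();

            var entities = new Dictionary<string, string>();
            foreach (JsonElement entity in root.GetProperty("entities").EnumerateArray())
            {
                // If the same entity type occurs twice, the last occurrence wins.
                entities[entity.GetProperty("type").GetString()] =
                    entity.GetProperty("entity").GetString();
            }

            return (intent, entities);
        }
    }
}
```

For the example utterance, such a reader would hand back the intent name “ExamDateCreation” and a “Date” entry holding the matched date text. Turning that text into an actual date is a separate step.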

the LUIS web application

LUIS is tightly integrated into the bot service. To integrate our model, all we had to do was add an attribute to a class and extend the prefabricated LuisDialog to get access to our intents and entities.

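    using System;
    using System.Threading.Tasks;
    using Microsoft.Bot.Builder.Dialogs; // LuisDialog, IDialogContext, IAwaitable
    using Microsoft.Bot.Builder.Luis;    // LuisModel and LuisIntent attributes

    [Serializable]
    [LuisModel("your-application-id", "your-api-key")]
    public class ExamSubjectLuisDialog : LuisDialog<object>
    {
        // "Klausurfach" (German for "exam subject") is the intent name defined in our LUIS app.
        [LuisIntent("Klausurfach")]
        private async Task ExamPowerInput(IDialogContext context, IAwaitable<object> result,
            Microsoft.Bot.Builder.Luis.Models.LuisResult luisResult)
        {
            ...
        }
    }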

There’s nothing else to do to integrate LUIS into your bot. The LuisDialog takes care of calling the LUIS REST API and converts the JSON response into C# objects you can use directly. Fun fact: the integration of Google Calendar into our bot took us several hours, a lot of nerves and around 300 lines of code, whereas the integration of our LUIS model took around 5 minutes and only the few lines of code shown above for every LuisDialog we created.

Summary

By using the Azure Bot Service in combination with a custom LUIS model, we were able to create a functional prototype of a conversational bot in roughly 24 hours. The bot understands and processes natural language and assists you in creating your custom learning schedule by adding appointments to your Google Calendar. With the power of the bot service, the bot is available on a number of channels, including Telegram, Slack, Facebook Messenger and Cortana.

It was a real pleasure to use these technologies because they work seamlessly together. Being able to use ready-to-use dialogs for LUIS sped up the development process enormously, as did being able to deploy the bot to Azure with one click from Visual Studio. I included a little demonstration video of the bot below, because it is no longer in operation.

Zeiss Hackathon Munich

The second hackathon we participated in took place in January 2018. It was organized by Zeiss and sponsored by Microsoft.

The theme of this hackathon was “VISIONary Ideas wanted”, and most of the teams did something with VR/AR. One of the special guests of the hackathon was a teenager with a congenital defect that left him with only about 10% of his eyesight. The question asked was “can AI improve his life?”, so we sat down with him and asked him about his daily struggles with being almost blind. One problem he faces regularly, as do other blind people according to our internet research, is withdrawing cash from an ATM.

So after some time brainstorming we came up with the idea of a simple Android app which guides you through the withdrawal process via auditory guidance. Almost everyone has a smartphone, and the disability support for them is actually pretty good, so a simple app is a pretty good option for a little, accessible life improvement.

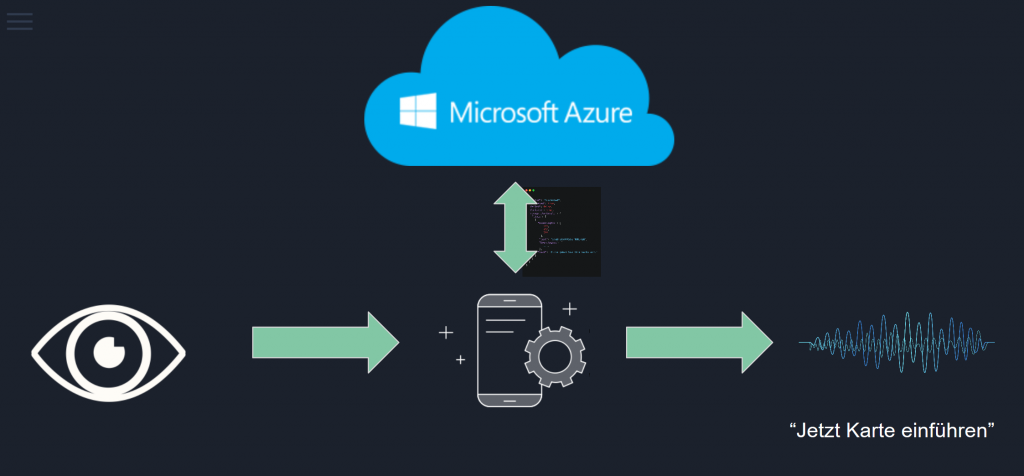

We figured out 3 essential things we needed to develop our app:

1. Image Classification, to distinguish between different types of ATM (for our prototype we focused on differentiating ATMs with touch screen and ATMs with buttons on the sides),

2. Optical Character Recognition, to read the text on the screen of the ATM to detect the stage of the withdrawal process the user is in and generate auditory commands via

3. Text to Speech, which comes out of the box in Android.

The Technology Stack

We wanted to develop an Android app, so we used Android in combination with Android Studio. We chose to develop an Android app simply because some of us were familiar with it and I always wanted to do something with Android.

For both the image classification and the OCR we again relied on Microsoft Services.

The Microsoft Vision API provides a REST endpoint for OCR, so we got ourselves an API key and we were ready to go.
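Our app was written for Android, so the following C# snippet is only a sketch of what such a call looks like, kept in the same language as the bot code above. It builds (but does not send) an OCR request with the raw image bytes as the body and the key in the `Ocp-Apim-Subscription-Key` header. `OcrRequestFactory` is a hypothetical helper, and the base URL is an assumption that depends on the region and API version of your own resource.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper that only builds the request; sending it is up to the caller.
public static class OcrRequestFactory
{
    // visionBaseUrl is an assumption, for example
    // "https://<region>.api.cognitive.microsoft.com/vision/v1.0".
    public static HttpRequestMessage Build(string visionBaseUrl, string apiKey, byte[] imageBytes)
    {
        var request = new HttpRequestMessage(
            HttpMethod.Post,
            visionBaseUrl.TrimEnd('/') + "/ocr?language=unk&detectOrientation=true");

        // The API key travels in the subscription-key header.
        request.Headers.Add("Ocp-Apim-Subscription-Key", apiKey);

        // The picture is sent as a plain binary body.
        request.Content = new ByteArrayContent(imageBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }
}
```

The request can then be passed to `HttpClient.SendAsync`, and the response body is the JSON with the recognized text.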

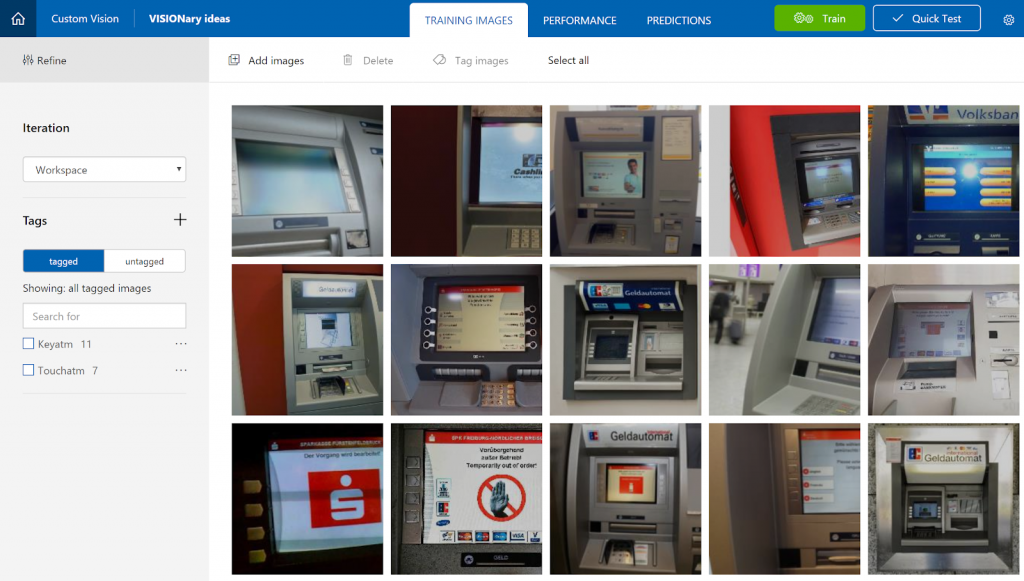

For the image classification, Microsoft provides the Custom Vision Service, where you can train your own model.

Plugging the parts together

The flow of our app is quite simple. It takes a picture every 5 seconds, converts it into a byte array and first sends it to our custom image classifier to detect the ATM type. After the successful classification of the ATM, all further images are sent to the vision API for optical character recognition. We get a JSON response with all the text the vision API was able to extract from the image. The app then matches the extracted text against keywords (we focused on the flow of ATMs from Sparkasse) to detect the current stage of the withdrawal process and creates auditory commands via text to speech. We didn’t even have to rely on frameworks by Microsoft like in the first hackathon; all we needed was to call the REST APIs of the services and process the responses.
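Processing the OCR response mostly means flattening it into plain text. The sketch below assumes the nested `regions`, `lines` and `words` layout of the OCR response, where every word carries a `text` field. It is written in C# with `System.Text.Json` (.NET Core 3.0 or later) purely for illustration, since our app did this on Android, and `OcrTextReader` is a hypothetical name.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper; assumes the regions -> lines -> words layout described above.
public static class OcrTextReader
{
    // Joins the words of each recognized line with spaces and the lines with newlines.
    public static string ReadAllText(string ocrJson)
    {
        var lines = new List<string>();

        using (JsonDocument doc = JsonDocument.Parse(ocrJson))
        {
            foreach (JsonElement region in doc.RootElement.GetProperty("regions").EnumerateArray())
            {
                foreach (JsonElement line in region.GetProperty("lines").EnumerateArray())
                {
                    var words = new List<string>();
                    foreach (JsonElement word in line.GetProperty("words").EnumerateArray())
                    {
                        words.Add(word.GetProperty("text").GetString());
                    }
                    lines.Add(string.Join(" ", words));
                }
            }
        }

        return string.Join("\n", lines);
    }
}
```

The resulting string is what the keyword matching works on.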

Summary

Much like in the first hackathon, we were really impressed by how well the services work out of the box. As you can imagine, the images of the ATM were oftentimes pretty shaky, but the OCR worked pretty well nevertheless. And because we only matched important but distinct keywords for every stage of the withdrawal process, the app could handle wrongly extracted sentences to a good degree. Our custom image classifier was able to differentiate between ATMs with touch screens and ATMs with buttons pretty reliably with only 18(!) sample pictures.
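The keyword idea can be sketched in a few lines. The stage names and German keywords below are invented for illustration (the real list from our app is not reproduced here), and `AtmStageDetector` is a hypothetical name. The point is that a stage is recognized as soon as one distinct keyword survives the OCR, no matter how garbled the rest of the sentence is.

```csharp
using System.Collections.Generic;

// Hypothetical sketch of keyword-based stage detection.
public static class AtmStageDetector
{
    // Made-up stages and keywords; each keyword should appear in one stage only.
    private static readonly (string Stage, string[] Keywords)[] Stages =
    {
        ("EnterPin", new[] { "geheimzahl" }),
        ("ChooseAmount", new[] { "betrag" }),
        ("TakeCard", new[] { "karte entnehmen" }),
    };

    // Returns the first stage whose keyword occurs in the OCR text, or "Unknown".
    public static string Detect(string ocrText)
    {
        // Lower-casing makes the match independent of how the OCR capitalized the text.
        string text = ocrText.ToLowerInvariant();

        foreach (var (stage, keywords) in Stages)
        {
            foreach (string keyword in keywords)
            {
                if (text.Contains(keyword))
                {
                    return stage;
                }
            }
        }
        return "Unknown";
    }
}
```

Because only a substring has to match, a text like "B1tte GEHEIMZAHL e1ngeben" is still recognized as the PIN stage even though the surrounding words were misread.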

our custom ATM type classifier

After the 24 hours of coding (-2 hours of sleep :/ ), we had a functioning prototype and were able to pitch our idea to the jury with a “living ATM” played by Danny :).

The jury was impressed by the prototype and our idea of an “analog” pitch (we had no PowerPoint at all) and awarded us the Microsoft prize. We all got a brand new Xbox One X, a book by Satya Nadella and an IoT kit, which was pretty cool (sorry for bragging :P).

Billige Plätze and their new Xboxes

Takeaways

I think there are 3 main points you can take away from this blog post and my experiences with AI as a service.

- You can create working prototypes in less than 24 hours

- You don’t have to start from scratch

- There is no shame in using pre-built models. When you need more, there is always time!

Before using these services I thought AI to be very time-consuming and difficult to get right. But we were able to create working prototypes in less than 24 hours! The models provided by Microsoft are very good and you can integrate them seamlessly into your application, be it in your conversational bot or in your Android App.

All projects are available in our GitHub organization, but beware, the code is very dirty, as usual for hackathons!

I hope I was able to inspire you to create your own application using AI services, and take away some of your fears of the whole AI and Machine Learning thing.

Have fun writing your own application and see you in our next blog post!